by Greg Scott | Aug 10, 2024 | Cybersecurity

DCIM\100MEDIA\DJI_0141.JPG – from Jan Wildeboer’s camera

I liked what Jan Wildeboer had to say about the xz-utils and CrowdStrike incidents so much, I asked Jan’s permission to paste it as a guest blog post right here. I made one minor formatting...

by Greg Scott | Jul 22, 2024 | Phish collection

At first, I really thought this grazed but not fazed email came from the Trump team. But this one did not come from Trump or the Republicans. The attempt on Trump’s life was wrong and it robbed an innocent family of their father. But this email cracked me up...

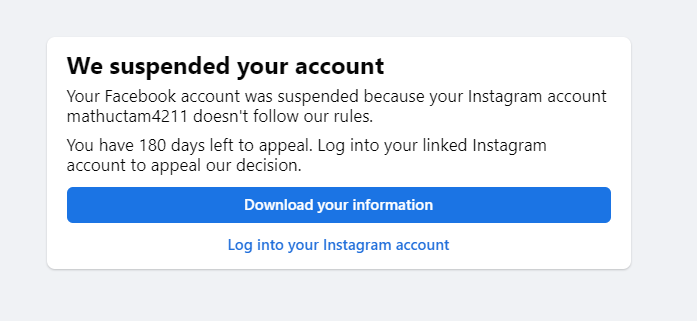

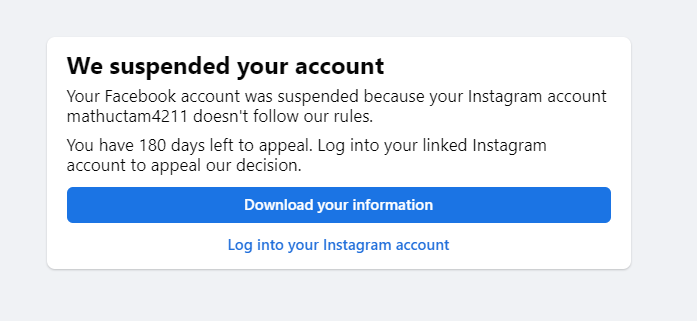

by Greg Scott | Jul 1, 2024 | Cybersecurity, Slice of Life

I started using Facebook back in 2009. A few friends greeted me. It was fun. I connected with my sister, T, and her family in Idaho and we’ve traded hundreds, or maybe thousands, of messages since then. T was 11 when I was born. She spent much of her teenage...

by Greg Scott | Jun 28, 2024 | Cybersecurity

Like nearly all sensational data breaches, the public will probably never know the root cause of the Great UnitedHealth Group data breach of 2024 that exposed medical information for millions and millions of Americans. But after following data breaches for a long...

by Greg Scott | May 20, 2024 | Slice of Life, Technology

For the latest in big company insanity, look to the recent Dell scheme to get rid of employees who work remotely. I snapped this picture while fighting rush-hour traffic in May, 2004. Now, Dell employees can look forward to more traffic fun. Dell has undergone quite...

by Greg Scott | May 5, 2024 | Phish collection, Slice of Life

Somebody generated an accurate credit report for me and used it in a phone phishing scam. They put on a worthy effort. My phone rang at 2:09 PM on Thursday, May 2, 2024. The Caller ID said “CHASE,” all uppercase, with phone number 800-955-9060. The caller,...

by Greg Scott | Apr 22, 2024 | Phish collection

Today’s authentication state of the art uses two of a few possible factors to identify us. We call this two-factor authentication (2FA). The possible factors include: Something you know – for example, a password. Something you have – maybe a cell phone...

by Greg Scott | Mar 14, 2024 | Phish collection

ICANN – the Internet Corporation for Assigned Names and Numbers – keeps a registry of names and contact information for every internet domain name. ICANN periodically sends reminder emails like this one, and everyone who operates a website has seen them....

by Greg Scott | Feb 1, 2024 | Politics and Religion

Christian Nationalism isn’t new. I had just never heard the name before. But it’s been in front of my eyes for a long time. It explains behavior and choices I attributed to the lunatic fringe, and it fills in missing pieces in understanding today’s...

by Greg Scott | Jan 16, 2024 | Phish collection

It’s a time-honored tradition to reserve the first spots on the year’s Greg Sustaining Membership roster for truly exceptional Patriots, and this year, I chose YOU. That’s right. I’m pleased to invite YOU to be one of the very first people to become a 2024...
